In this post I will discuss a few ways you can configure OpenStack Networking and how each choice affects performance. OpenStack Networking (Neutron) offers several configuration options, each of which leads to a different architectural implementation and therefore directly affects performance. To understand the different ways you can configure Neutron networking, we first need to look at what Neutron ML2 is.

What is ML2?

ML2 stands for Modular Layer 2. It is a framework that allows OpenStack to use a variety of L2 technologies. There are two kinds of ML2 drivers:

- Type Drivers

Type drivers define the type of an OpenStack network, that is, the technology used to segment it. Examples are VLAN, VXLAN and GRE.

- Mechanism Drivers

The mechanism driver is responsible for actually realizing the L2 network needed by OpenStack resources. For example, in the case of the ML2/OVS mechanism driver, the L2 networks are realized by an openvswitch-agent running on each compute and networker node to configure Open vSwitch (OVS) on that node.
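Both kinds of driver are selected in the `[ml2]` section of `ml2_conf.ini`. The sketch below renders that section with Python's standard `configparser`, assuming an ML2/OVS deployment with VXLAN tenant networks. The option names `type_drivers`, `tenant_network_types`, `mechanism_drivers` and `[ml2_type_vxlan] vni_ranges` are standard Neutron options, but the helper `render_ml2_conf` and the values passed to it are illustrative assumptions, not the settings of the test bed used in this post.

```python
import configparser
import io


def render_ml2_conf(type_drivers, tenant_network_types, mechanism_drivers,
                    vni_ranges="1:1000"):
    """Return an illustrative ml2_conf.ini snippet as a string."""
    cfg = configparser.ConfigParser()
    cfg["ml2"] = {
        # Type drivers: which network types Neutron can create (VLAN, VXLAN, GRE, ...).
        "type_drivers": ",".join(type_drivers),
        # Network type used when a tenant creates a network without specifying one.
        "tenant_network_types": ",".join(tenant_network_types),
        # Mechanism drivers: which backend actually realizes the networks on each node.
        "mechanism_drivers": ",".join(mechanism_drivers),
    }
    # VXLAN segmentation IDs (VNIs) available to tenant networks; range is an assumption.
    cfg["ml2_type_vxlan"] = {"vni_ranges": vni_ranges}
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(render_ml2_conf(["vlan", "vxlan"], ["vxlan"], ["openvswitch"]))
```

Swapping the mechanism driver (and, for OVN, the tenant network type) is what selects a different backend while the rest of the ML2 framework stays the same.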

ML2 Plugins

Some of the popular open source plugins (mechanism drivers) supported in the Rocky release of OpenStack are ML2/OVS, ML2/OVN and ML2/ODL. As mentioned above, the ML2/OVS mechanism driver relies on an openvswitch-agent running on each node to program and configure the flows needed by the VMs on that node. OVN is a network virtualization technology developed by the same community that develops OVS, and in OpenStack it provides a simpler way to realize virtual networks than vanilla OVS. Unlike ML2/OVS, however, ML2/OVN has an agentless architecture: all of the Open vSwitch configuration is coordinated through databases. Because ML2/OVS uses RPC/RabbitMQ for communication between agents, we can expect a lower load on RabbitMQ with ML2/OVN. Finally, ML2/ODL makes use of the OpenDaylight (ODL) software-defined networking (SDN) controller, which proactively manages flows by talking to the Open vSwitch instance on each node through OpenFlow.

You would think you can choose an ML2 plugin and be done, right? No, OpenStack spoils you with even more choices! The next configuration option is Distributed Virtual Routing (DVR), which you can enable by setting router_distributed to True in neutron.conf.

In the Legacy ML2/OVS architecture, any traffic that needs to go from one OpenStack subnet to another, or needs to be SNATed to the outside world, has to make an extra hop through the networker node before it reaches its final destination, be it another compute node (hypervisor) or the internet. This means that if VM1 on Neutron Subnet1 and VM2 on Neutron Subnet2 are both placed on the same hypervisor, a packet from VM1 to VM2 still has to leave the hypervisor, get routed at the networker node and come back to the hypervisor before reaching VM2.

DVR solves this problem by placing a router on every compute node. With DVR, East-West traffic is routed directly on the compute node, and traffic bound for the outside world from a VM with a Floating IP never has to go through the networker node either. However, ML2/OVS DVR has some caveats: traffic to the outside world from a VM without a Floating IP still has to go through the networker node for SNAT, and IPv6 isn't currently supported. You can find a great deal of information about how traffic patterns differ between Legacy ML2/OVS and DVR ML2/OVS at these links: https://docs.openstack.org/liberty/networking-guide/scenario-classic-ovs.html and https://docs.openstack.org/newton/networking-guide/deploy-ovs-ha-dvr.html. With ML2/OVN and ML2/ODL, distributed routing is implemented natively and is enabled by default.
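For ML2/OVS, `router_distributed` only sets the default type for newly created routers; the agents on each node must also run in DVR mode. The sketch below renders the per-role settings described in the Newton DVR guide linked above: `agent_mode` in `l3_agent.ini` (`dvr` on compute nodes, `dvr_snat` on networker nodes, which still handle SNAT), plus `enable_distributed_routing` and `l2_population` in the `[agent]` section of `openvswitch_agent.ini`. The helper names and the three node-role labels are hypothetical, and real deployments (e.g. TripleO) set these through their own templates.

```python
import configparser
import io


def render_ini(sections):
    """Serialize a {section: {option: value}} dict to INI text."""
    cfg = configparser.ConfigParser()
    cfg.read_dict(sections)  # "DEFAULT" is stored as the parser's default section
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


def dvr_settings(node_role):
    """Return {filename: ini_text} for a hypothetical node role."""
    if node_role == "controller":
        # New routers are created as distributed routers by default.
        return {"neutron.conf": render_ini({"DEFAULT": {"router_distributed": "True"}})}
    if node_role in ("compute", "networker"):
        # Compute nodes route east-west and floating-IP traffic locally ("dvr");
        # networker nodes also host the centralized SNAT namespace ("dvr_snat").
        mode = "dvr" if node_role == "compute" else "dvr_snat"
        return {
            "l3_agent.ini": render_ini({"DEFAULT": {"agent_mode": mode}}),
            # The OVS agent installs the DVR flows; DVR relies on l2population.
            "openvswitch_agent.ini": render_ini(
                {"agent": {"enable_distributed_routing": "True",
                           "l2_population": "True"}}
            ),
        }
    raise ValueError(f"unknown node role: {node_role}")


if __name__ == "__main__":
    for role in ("controller", "compute", "networker"):
        for name, text in dvr_settings(role).items():
            print(f"# {role}: {name}\n{text}")
```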

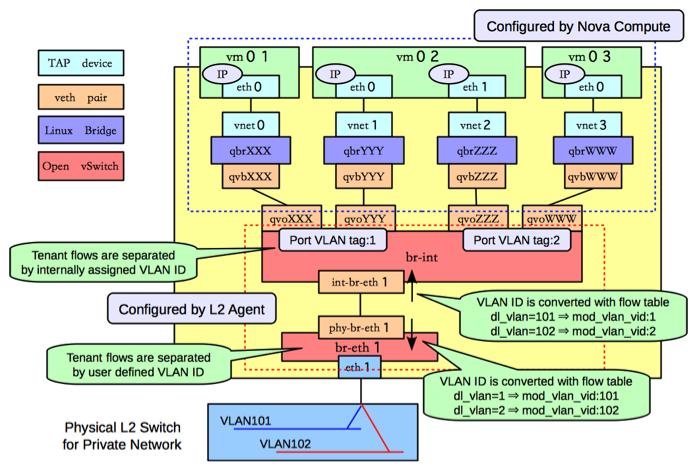

Yet another configuration option is the firewall driver. It is worth noting that many improvements have been made to OpenStack over the years, resulting in more configuration options than one would like. Because existing production deployments may need to keep using legacy features (for example, for upgrade compatibility), improvements are often introduced as configuration flags that can be turned on or off. One such option is firewall_driver in openvswitch_agent.ini, which is specific to ML2/OVS. The iptables_hybrid driver has been available for several years, and more recently the openvswitch firewall driver, based on OVS conntrack (a more native way to implement a firewall), has also become available. Although iptables_hybrid remains the default, the openvswitch firewall driver provides a simpler and more performant solution. The figure below illustrates the layout when the iptables_hybrid firewall driver is used: the Linux bridge between the instance and br-int exists for the sole purpose of implementing security group rules through iptables. The openvswitch firewall driver eliminates the need for this Linux bridge.
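Switching drivers is a single setting in the `[securitygroup]` section of `openvswitch_agent.ini`. The sketch below changes that setting in an INI string using Python's `configparser`; the helper name `set_firewall_driver` and the sample file contents are hypothetical. Note that `configparser` drops comments when it writes a file, so on a real node a tool such as crudini (or your deployment tool) is a better fit, and neutron-openvswitch-agent has to be restarted for the change to take effect.

```python
import configparser
import io


def set_firewall_driver(ini_text, driver):
    """Return ini_text with [securitygroup] firewall_driver set to `driver`."""
    if driver not in ("iptables_hybrid", "openvswitch"):
        raise ValueError(f"unsupported driver for this sketch: {driver}")
    cfg = configparser.ConfigParser()
    cfg.read_string(ini_text)
    if not cfg.has_section("securitygroup"):
        cfg.add_section("securitygroup")
    # iptables_hybrid: per-port Linux bridge (qbr) between the VM and br-int,
    # where iptables enforces security groups.
    # openvswitch: security groups enforced by OVS conntrack flows, no extra bridge.
    cfg.set("securitygroup", "firewall_driver", driver)
    buf = io.StringIO()
    cfg.write(buf)  # note: comments in the original text are not preserved
    return buf.getvalue()


# Hypothetical excerpt of openvswitch_agent.ini
sample = """[agent]
tunnel_types = vxlan

[securitygroup]
firewall_driver = iptables_hybrid
"""

if __name__ == "__main__":
    print(set_firewall_driver(sample, "openvswitch"))
```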

Combining the configuration options discussed above gives us several different OpenStack Networking architectures. I used a tool called Shaker to test the performance of each of them. Shaker automatically spawns the required network topology (L2, L3 East-West), including the Neutron networks, routers and virtual machines, through Heat. It then orchestrates the test run and captures network performance data using standard tools such as iperf, iperf3, netperf and flent.
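To reproduce this kind of run, a Shaker invocation needs at least a server endpoint reachable by the test VMs, a scenario and a report path (`--server-endpoint`, `--scenario`, `--report`). The sketch below only builds the command lines and does not run anything. The mapping of the four traffic scenarios used later in this post onto Shaker's bundled `dense_*` (instances on the same compute node) and `full_*` (instances on separate compute nodes) scenarios is an assumption, as is the placeholder endpoint `192.0.2.10:5999`; the post does not say which scenario files were used, so verify the names against your Shaker installation.

```python
import shlex

# Assumed mapping of this post's traffic scenarios to Shaker's bundled scenarios.
SCENARIOS = {
    "L2 same compute": "openstack/dense_l2",
    "L2 separate compute": "openstack/full_l2",
    "L3 east-west same compute": "openstack/dense_l3_east_west",
    "L3 east-west separate compute": "openstack/full_l3_east_west",
}


def shaker_command(scenario, server_endpoint, report_dir="reports"):
    """Build (but do not run) a shaker command line for one traffic scenario."""
    name = SCENARIOS[scenario]
    report = f"{report_dir}/{name.split('/')[-1]}.html"
    return [
        "shaker",
        "--server-endpoint", server_endpoint,  # host:port the test VMs call back to
        "--scenario", name,
        "--report", report,                    # HTML report written by shaker
    ]


if __name__ == "__main__":
    for s in SCENARIOS:
        print(shlex.join(shaker_command(s, "192.0.2.10:5999")))
```

Running the same four commands once per configuration keeps the topology and tooling identical, so only the Neutron settings vary between runs.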

Performance Comparison

Although all three ML2 plugins discussed above use Open vSwitch as the virtual switch, the flow pipelines they program differ, which leads to differences in performance. Adding DVR and the firewall drivers to the mix, we see a range of performance differences between the solutions. We will compare TCP STREAM throughput, TCP Request-Response (for latency) and UDP packets/sec for the following six configurations:

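| Configuration | Routing | Firewall driver |
|---|---|---|
| Legacy ML2/OVS Iptables Firewall | Centralized (networker node) | iptables_hybrid |
| Legacy ML2/OVS Openvswitch Firewall | Centralized (networker node) | openvswitch |
| DVR ML2/OVS Iptables Firewall | Distributed (DVR) | iptables_hybrid |
| DVR ML2/OVS Openvswitch Firewall | Distributed (DVR) | openvswitch |
| ML2/OVN | Distributed (native) | N/A (firewall_driver is specific to ML2/OVS) |
| ML2/ODL | Distributed (native) | N/A (firewall_driver is specific to ML2/OVS) |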
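The six configurations differ in only three settings, which makes it easy to see what each pairwise comparison isolates. The sketch below records those settings as data; it is illustrative only. `None` marks a setting that does not apply, and the ODL mechanism driver name `opendaylight_v2` (from the networking-odl project) is an assumption to verify for your release.

```python
from typing import NamedTuple, Optional


class Knobs(NamedTuple):
    """Settings that distinguish the six configurations (illustrative)."""
    mechanism_drivers: str              # [ml2] mechanism_drivers
    router_distributed: Optional[bool]  # neutron.conf; None = not modeled (native routing)
    firewall_driver: Optional[str]      # openvswitch_agent.ini; ML2/OVS only


SOLUTIONS = {
    "Legacy ML2/OVS Iptables Firewall": Knobs("openvswitch", False, "iptables_hybrid"),
    "Legacy ML2/OVS Openvswitch Firewall": Knobs("openvswitch", False, "openvswitch"),
    "DVR ML2/OVS Iptables Firewall": Knobs("openvswitch", True, "iptables_hybrid"),
    "DVR ML2/OVS Openvswitch Firewall": Knobs("openvswitch", True, "openvswitch"),
    # OVN and ODL distribute routing natively, so the DVR flag is not modeled.
    "ML2/OVN": Knobs("ovn", None, None),
    # Assumption: networking-odl's "opendaylight_v2" mechanism driver name.
    "ML2/ODL": Knobs("opendaylight_v2", None, None),
}


def differing_knobs(a, b):
    """Return the names of the settings that differ between configurations a and b."""
    ka, kb = SOLUTIONS[a], SOLUTIONS[b]
    return [name for name in Knobs._fields if getattr(ka, name) != getattr(kb, name)]


if __name__ == "__main__":
    # Comparing these two isolates the effect of the firewall driver alone.
    print(differing_knobs("DVR ML2/OVS Iptables Firewall",
                          "DVR ML2/OVS Openvswitch Firewall"))
```

Pairs that differ in a single setting (for example Legacy vs DVR with the same firewall driver) give the cleanest read on what that setting contributes.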

The four different traffic scenarios we will use to compare each of these solutions are:

- L2 Same Compute: Sender and Receiver VMs are on the same Neutron network and are placed on the same hypervisor.

- L2 Separate Compute: Sender and Receiver VMs are on the same Neutron network and are placed on different hypervisors.

- L3 East-West Same Compute: Sender and Receiver VMs are on different Neutron networks and are placed on the same hypervisor. Note that with DVR, traffic in this case never has to leave the compute node, as the router is also present on the compute node.

- L3 East-West Separate Compute: Sender and Receiver VMs are on different Neutron networks and are placed on different hypervisors.

Note that enabling or disabling DVR does not affect the L2 scenario results, as DVR applies to L3 only. Any difference between Legacy and DVR in the L2 scenarios is simply run-to-run variance.
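The effect of DVR on these four scenarios can be summarized by which nodes a VM-to-VM packet crosses. The sketch below is a simplified model of the ML2/OVS traffic paths described in the DVR discussion, not anything Neutron computes; the node names `compute-1`, `compute-2` and `networker` are hypothetical, and SNAT, Floating IP and North-South traffic are deliberately left out.

```python
def node_path(routing, l3, same_compute):
    """Nodes a VM-to-VM packet crosses in a simplified ML2/OVS model.

    routing: "legacy" (central router on the networker node) or "dvr".
    l3: True if sender and receiver are on different Neutron networks.
    same_compute: True if both VMs run on the same hypervisor.
    """
    if routing not in ("legacy", "dvr"):
        raise ValueError(f"unknown routing mode: {routing}")
    src = "compute-1"
    dst = src if same_compute else "compute-2"
    if l3 and routing == "legacy":
        # Legacy: every routed packet detours through the networker node.
        return [src, "networker", dst]
    # L2 traffic needs no router; with DVR the source hypervisor routes locally.
    return [src] if same_compute else [src, dst]


def tunnel_hops(path):
    """Number of node-to-node hops over the tenant (tunnel) network."""
    return len(path) - 1


if __name__ == "__main__":
    for routing in ("legacy", "dvr"):
        for l3 in (False, True):
            for same in (True, False):
                p = node_path(routing, l3, same)
                print(routing, "L3" if l3 else "L2",
                      "same" if same else "separate", p, tunnel_hops(p))
```

In this model the L2 paths are identical for Legacy and DVR, which matches the note above, while Legacy L3 same-compute traffic pays two tunnel hops that DVR avoids entirely.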

The VXLAN type driver is used for ML2/OVS and ML2/ODL, whereas the Geneve type driver is used for ML2/OVN. In other words, nodes are connected by VXLAN tunnels with ML2/OVS and ML2/ODL, and by Geneve tunnels with ML2/OVN.
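The tunnel type determines how many header bytes wrap each tenant packet, and therefore the largest MTU an instance can use without fragmentation. The post does not state the MTUs in the test bed, so the arithmetic below assumes a 1500-byte IPv4 underlay. Header sizes follow the VXLAN (RFC 7348) and Geneve (RFC 8926) specifications; the 8 bytes of Geneve options that OVN adds (one option header plus 4 bytes of metadata) is an assumption worth checking against your OVN version.

```python
# Header sizes in bytes, IPv4 underlay.
OUTER_IPV4 = 20
OUTER_UDP = 8
INNER_ETHERNET = 14        # the VM's own Ethernet header travels inside the tunnel
VXLAN_HEADER = 8           # RFC 7348
GENEVE_BASE_HEADER = 8     # RFC 8926, excluding options
OVN_GENEVE_OPTIONS = 8     # assumption: 4-byte option header + 4 bytes of OVN metadata


def instance_mtu(underlay_mtu, tunnel_header):
    """Largest IP packet a VM can send without the encapsulated packet exceeding the underlay MTU."""
    # Encapsulated packet = outer IPv4 + UDP + tunnel header + inner Ethernet + inner IP packet.
    return underlay_mtu - OUTER_IPV4 - OUTER_UDP - tunnel_header - INNER_ETHERNET


if __name__ == "__main__":
    print("VXLAN :", instance_mtu(1500, VXLAN_HEADER))                             # 1450
    print("Geneve:", instance_mtu(1500, GENEVE_BASE_HEADER + OVN_GENEVE_OPTIONS))  # 1442
```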

The deployment was done with the TripleO Rocky release, using network isolation. Tenant traffic between nodes goes over a 10G link in the test setup.

TCP STREAM

Quick Observations

In the L2 same compute scenario, ML2/OVS with the openvswitch firewall driver performs best. In the L2 separate compute scenario, ML2/OVN performs better than all other solutions under consideration. Legacy ML2/OVS performs poorly in the L3 East-West scenarios with either firewall driver. DVR helps ML2/OVS, but ML2/OVN and ML2/ODL still dominate.

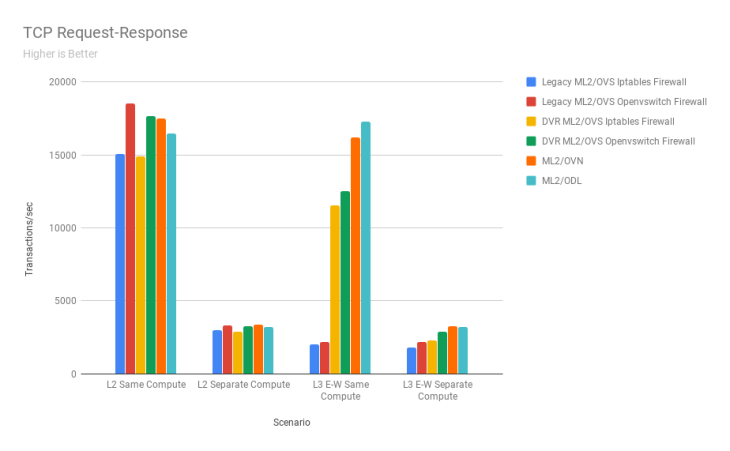

TCP Request-Response

This test helps gauge the latency.
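With a request-response test such as netperf's TCP_RR, which by default keeps a single transaction in flight, each transaction is one full round trip, so the mean round-trip latency is simply the reciprocal of the transaction rate. The function name and the rates below are made-up inputs for illustration, not results from this post.

```python
def mean_rtt_us(transactions_per_sec):
    """Mean round-trip time in microseconds for a request-response test
    with a single outstanding transaction."""
    if transactions_per_sec <= 0:
        raise ValueError("transaction rate must be positive")
    return 1_000_000 / transactions_per_sec


if __name__ == "__main__":
    # Hypothetical rates: a higher transaction rate means lower latency.
    for rate in (10_000, 25_000):
        print(rate, "trans/s ->", mean_rtt_us(rate), "us")
```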

Quick Observations

ML2/OVS with the openvswitch firewall driver performs better than ML2/OVN and ML2/ODL in the L2 same compute scenario. In the L2 separate compute scenario, ML2/OVN marginally outperforms the other solutions. In the L3 East-West same compute scenario, ML2/OVN performs best, with ML2/ODL a close second. A similar trend is seen in the L3 East-West separate compute scenario.

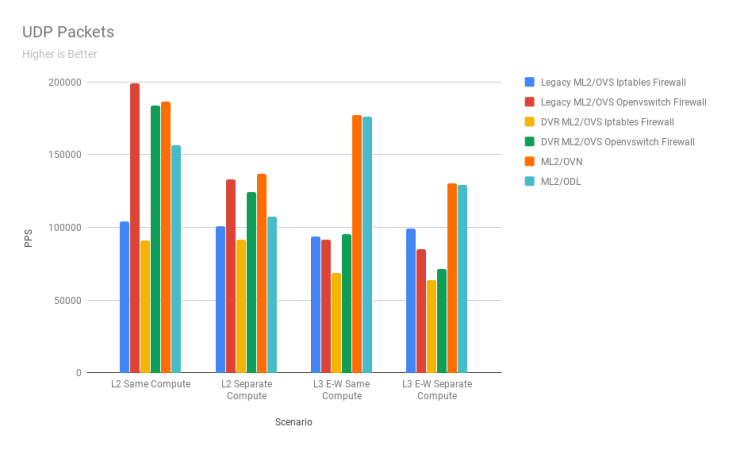

UDP Packets/Sec

Quick Observations

ML2/OVS continues to dominate the L2 same compute scenario, even for UDP traffic. ML2/OVN comes first for L2 separate compute traffic, with ML2/OVS with the openvswitch firewall driver a close second. ML2/OVN and ML2/ODL dominate the L3 East-West same compute scenario, and a similar trend is seen in the L3 East-West separate compute scenario.

Summary

We looked at some of the different Neutron implementations and compared their performance. In all cases except L2 same compute, ML2/OVN and ML2/ODL dominate. ML2/OVN is the overall best performer, and even where the difference between ML2/OVN and ML2/ODL is small, ML2/OVN is the more attractive solution due to its relatively low resource footprint.
